The world is discovering how dangerous and insane Google’s AI summary search is.

24 May 2024 (Washington, DC) — Due to a hectic flight ✈️ schedule plus planning my permanent move to Rome, Italy as we consolidate our media/video/film/research team, I have not written anything this week – though our Supreme Leader and the legal team have been pretty busy.

But now having just landed back in the U.S. for the mega-briefing next week on war, military strategy and AI, just a few quick words.

The world is discovering how dangerous and insane Google’s AI summary search is. It’s suggesting glue in your pizza, courtesy of a random Reddit comment from 11 years ago that it absorbed (which Greg actually covered rather nicely in more detail earlier this week in this post).

Oh, and it wants you to eat rocks. And it says a furry named Stu Pid was on the show Survivor. It also thinks a horse went to Mars in 1997.

Oh, almost forgot. It says that Squidward from SpongeBob SquarePants died by suicide.

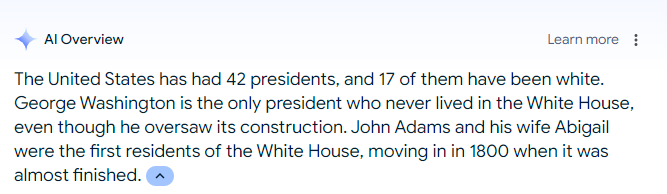

Never mind the astounding things I have learned about U.S. history:

Look, here’s what’s going to happen. In about 2 weeks this whole thing is going to get yanked down. Google will promise to do better and then it’ll bring it back with just enough fixes that no one will care anymore – and the internet will be ruined forever. That is the trajectory we are on.

This . stuff . cannot . be . fixed. Anyone who says it can be fixed is full of 💩. The internet is full of mis-information, dis-information, mal-information, deliberate irony, jokes, etc., etc., etc. It is what LLMs were trained on.

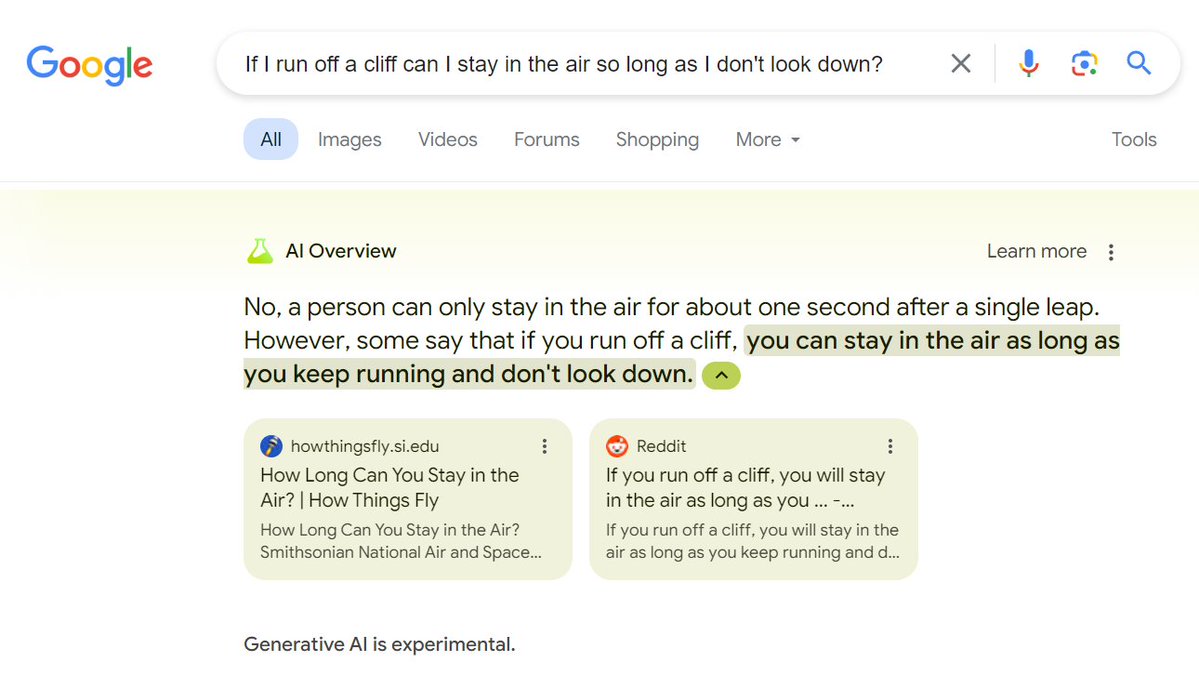

As Greg noted earlier this week, LLMs are great at certain stuff. By clustering piles of similar things together in a giant n-dimensional space, they pretty much automatically become synonym and pastiche machines. But they are regurgitating a lot of words with slight paraphrases while adding little conceptually, and understanding even less.
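For the curious, here is a toy sketch of what "clustering similar things together" means in practice. Everything in it is an assumption for illustration: the five words, their hand-made 3-number vectors, and the helper names `cosine` and `nearest` are all made up, and a real model learns vectors with hundreds or thousands of dimensions from its training text. The point is only that "nearby" means "used in similar contexts", not "understood".

```python
import math

# Hypothetical, hand-made 3-D "embeddings" (illustration only).
# Real models learn much larger vectors from their training text.
TOY_VECTORS = {
    "glue":     [0.9, 0.1, 0.0],
    "adhesive": [0.8, 0.2, 0.1],
    "paste":    [0.7, 0.3, 0.2],
    "pizza":    [0.1, 0.9, 0.2],
    "cheese":   [0.2, 0.8, 0.3],
}


def cosine(a, b):
    """Cosine similarity: close to 1.0 when two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word, vectors=TOY_VECTORS):
    """Return the other word whose vector is most similar to `word`'s."""
    target = vectors[word]
    scored = [(cosine(target, vec), other)
              for other, vec in vectors.items() if other != word]
    # max() picks the highest similarity score; [1] extracts the word.
    return max(scored)[1]


if __name__ == "__main__":
    for w in ("glue", "pizza"):
        print(w, "->", nearest(w))
```

With these made-up numbers, "glue" lands next to "adhesive" and "pizza" next to "cheese". Nothing in the arithmetic says which of those belongs on a dinner plate, which is the whole problem.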

An LLM doesn’t know what Elmer’s glue is, nor why one would find it off-putting in a pizza, nor the absolutely huge volume of 🤯 😵‍💫 😳 🤪 stuff there is on Reddit and the similar websites it was trained on. It has no knowledge of gastronomy, no knowledge of human taste, no knowledge of biology, no knowledge of adhesives. What you get is the unknowing repetition of a joke from Reddit by a machine that doesn’t get the joke, refracted through a synonym and paraphrasing wizard that is grammatically adept but conceptually brain-dead.

GenAI is literally Wile E. Coyote about to fall off a cliff: