A new information age is dawning

27 May 2024 (Washington, DC) — Last week Google debuted its “AI Overviews”, a box which summarizes some users’ search results using genAI. It is less than reliable so far, as dozens of examples shared on social media have shown.

For example, as our boss Gregory Bufithis pointed out in his post, AI Overviews have recommended adding glue to pizza to stop the cheese from sliding off, and have offered a rather “intriguing” history of the U.S. presidents. Me? I liked the answer that seemed to suggest a depressed person could jump off the Golden Gate Bridge.

When (OK, if) AI Overviews become reliable, they’ll change the web experience. Users will spend less time clicking through to websites as Google’s search summaries improve and become useful enough to answer queries on their own. The few remaining clicks may increasingly go to the highest bidder, as ads start to appear in the summaries.

OpenAI and other companies are pursuing licensing deals with publishers, and several have already been signed (with Axel Springer SE and News Corp, for example). But as journalist Hamilton Nolan points out, for publishers this is akin to “selling your own house for firewood”. A leaked deck from OpenAI’s “Preferred Publisher Program” promises that members of the program will get priority placement and “richer brand expression” in chat conversations. Results from AI systems may be influenced by sponsored deals even more covertly.

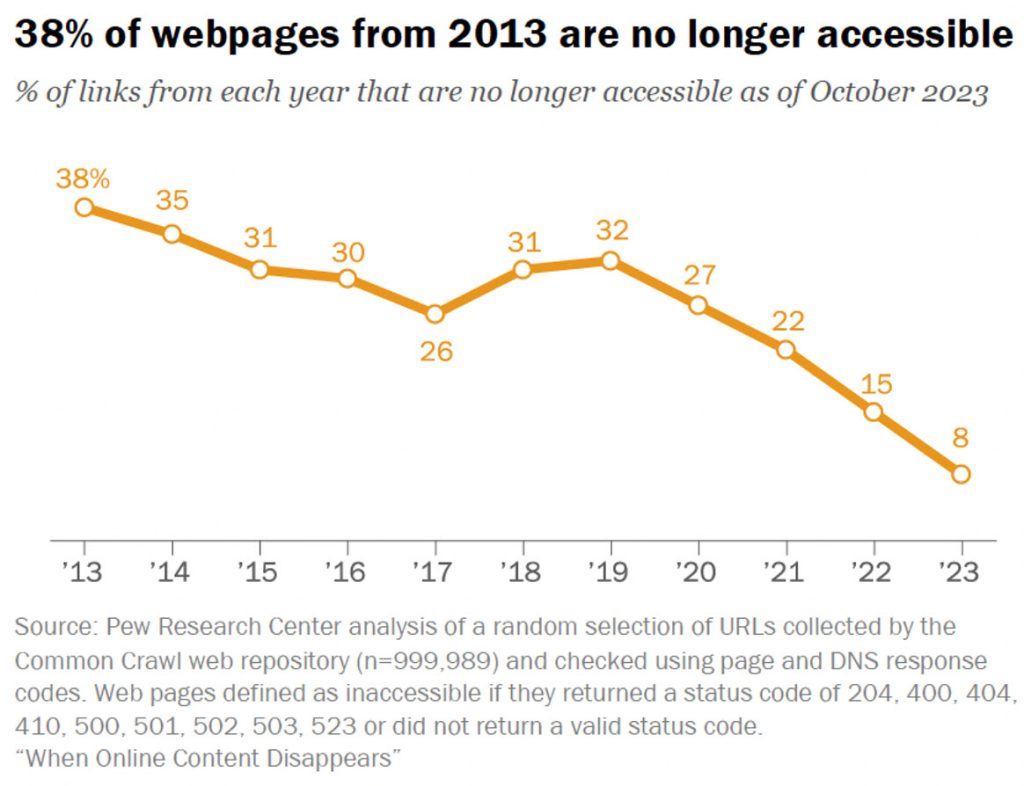

Set against this trend is the sad, steady disappearance of the Web itself. One estimate shows that 38% of web pages that existed in 2013 are no longer accessible.

There are many moving pieces, but it is clear that our online knowledge ecosystem is likely to undergo a radical shift. More and more of our information will be written and curated by AI whose data provenance is unclear. Worse, the Web’s heritage may be lost as pages die, never to reach search indexes or language models.

Two things are needed in this emergent knowledge ecosystem:

First, a better understanding of how LLMs transform sources of information into structured outputs, in a way that can be audited. Just this week, the AI safety and research company Anthropic reported a breakthrough in this area, specifically in what is called mechanistic interpretability. The team found that the model contained a map of related concepts that could be adjusted manually, pointing to a promising avenue of safety research. It is a fascinating paper that I urge you to read. The researchers managed to get inside the “black box” of an LLM and work out some of the features and concepts it has learned.

Second, there needs to be an active effort to maintain and preserve the Web’s rich information heritage. And, yes, that is going to be a huge task.

To our American readers, I trust you’ll remember your fallen soldiers today. We humbly suggest you read the thoughts of our boss, Greg Bufithis, on this Memorial Day. His experiences give him a perspective few of us can offer.